Research conducted during my time as an Assistant Professor at Durham University, UK.

Insights from the Use of Previously Unseen Neural Architecture Search Datasets

The vast space of neural networks that could be used to solve a given problem, each with different performance, means that a Deep Learning expert is still required to identify the best one. This runs counter to the hope of removing the need for experts. Neural Architecture Search (NAS) addresses this by automatically identifying the best architecture. To date, however, NAS work has focused on a small set of datasets, which we argue are not representative of real-world problems.

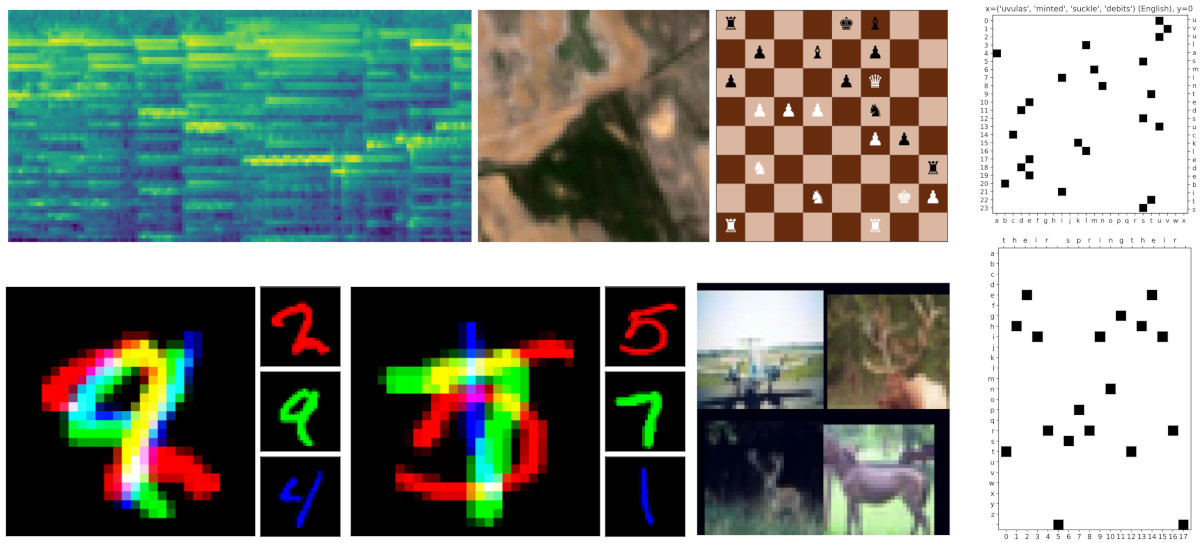

We introduce eight new datasets created for a series of NAS Challenges: AddNIST, Language, MultNIST, CIFARTile, Gutenberg, Isabella, GeoClassing, and Chesseract. These datasets and challenges were developed to direct attention to issues in NAS development and to encourage authors to consider how their models will perform on datasets unknown to them at development time. We present experiments using standard Deep Learning methods and contemporary state-of-the-art NAS methods, together with an analysis of the best results from challenge participants. For further details, please refer to the paper.

Proposed Datasets

We propose a set of new, previously unseen datasets designed specifically for NAS challenges. They have been used in the Unseen Data NAS Challenge and are:

- AddNIST: A dataset with images consisting of MNIST digits in three channels, where the class label is the sum of the digits.

- Language: Encodes words from ten languages into images, requiring the model to identify the language based on letter frequency.

- MultNIST: Similar to AddNIST but uses the product of the digits modulo 10 for class labels.

- CIFARTile: Images tiled from CIFAR-10 images, with labels representing the number of unique classes in the tile.

- Gutenberg: Uses sequences of words from literary works encoded into images, with the task of identifying the author.

- Isabella: Musical recordings converted into spectrograms, classified by the era of composition.

- GeoClassing: Satellite images labeled by the country they represent.

- Chesseract: Chess board positions labeled by the game outcome.
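
The labeling rules for the three synthetic image datasets in the list above (AddNIST, MultNIST, and CIFARTile) can be sketched in a few lines of Python. This is a minimal illustration based only on the descriptions given here, not the authors' dataset-generation code: the function names are hypothetical, and the released datasets may apply further steps (such as label offsets or filtering of combinations) that are not shown.

```python
from math import prod


def addnist_label(digits):
    """AddNIST-style label: the sum of the MNIST digits, one per channel.

    Assumption: `digits` holds the three per-channel digit values.
    """
    return sum(digits)


def multnist_label(digits):
    """MultNIST-style label: the product of the digits, modulo 10."""
    return prod(digits) % 10


def cifartile_label(tile_classes):
    """CIFARTile-style label: number of unique CIFAR-10 classes in a tile.

    Assumption: `tile_classes` lists the class index of each tiled image.
    """
    return len(set(tile_classes))


if __name__ == "__main__":
    channels = (3, 5, 7)  # hypothetical digits in the three channels
    print(addnist_label(channels))        # 3 + 5 + 7 = 15
    print(multnist_label(channels))       # (3 * 5 * 7) % 10 = 5
    print(cifartile_label([0, 0, 3, 9]))  # classes {0, 3, 9} -> 3
```

In each case the label depends on a combination of the underlying images rather than on any single one, so a model cannot succeed simply by recognising individual digits or objects.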

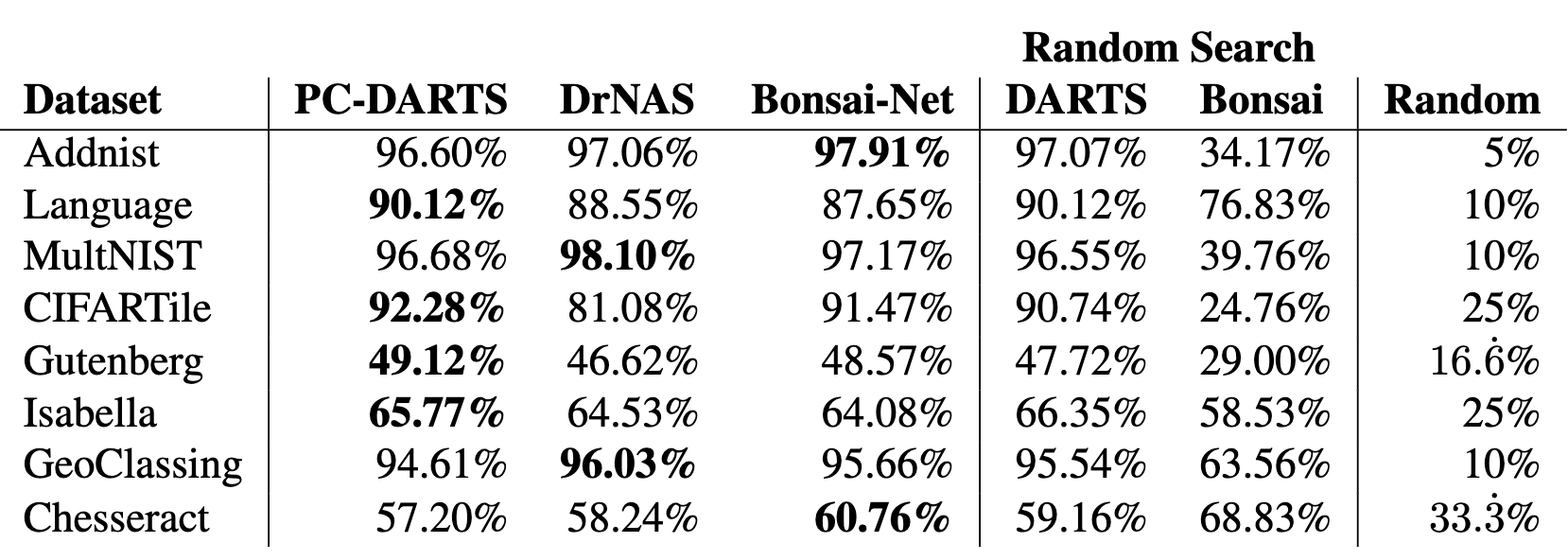

Experimental Results

Baseline results were obtained using common CNN architectures such as ResNet-18, AlexNet, VGG16, and DenseNet. These results provide a reference point for the performance of NAS methods on the new datasets. We also provide results of seminal NAS methods on our proposed datasets, found below. For further details, please refer to the paper.

Technical Implementation

The implementation uses Python, PyTorch, and TensorFlow.

Supplementary Video

For further details of the proposed datasets and the results, please watch the video created as a presentation of the paper.

Publication

Paper: arXiv:2404.02189

Citation:

Rob Geada, David Towers, Matthew Forshaw, Amir Atapour-Abarghouei, and A. Stephen McGough. "Insights from the Use of Previously Unseen Neural Architecture Search Datasets", in IEEE/CVF Conf. Computer Vision and Pattern Recognition (CVPR), IEEE, 2024.

BibTeX